SHREC 2016 - 3D Sketch-Based 3D Shape Retrieval

Call For Participation

Objective

The objective of this track is to evaluate the performance of different 3D sketch-based 3D model retrieval algorithms using a hand-drawn 3D sketch query dataset on a generic 3D model dataset.

Introduction

Sketch-based 3D model retrieval aims to retrieve relevant 3D models using one or more sketches as input. This scheme is intuitive and convenient for users to learn and use when searching for 3D models. It is also important for related applications such as sketch-based modeling and recognition.

However, existing sketch-based 3D model retrieval systems are mainly based on 2D sketch queries, which contain limited information about the 3D shapes they represent. Moreover, there is a semantic gap between the iconic representation of 2D sketches and the accurate 3D coordinate representation of 3D models. This makes retrieval with 2D sketch queries much more challenging than retrieval with 3D model queries.

Motivated by these obstacles, an interesting question arises: "Why not 3D sketches?" A 3D sketch may describe an object better than a 2D sketch: it not only encodes 3D information about the object (such as depth and features of more facets), but also contains the salient 3D feature lines of the corresponding 3D model.

The popularity of low-cost depth cameras such as Microsoft's Kinect means that 3D sketching in a virtual 3D space is no longer a dream. Kinect allows us to track the 3D locations of 20 joints of the human body, so a Kinect sensor can be used to track the 3D location of a user's hand and create a 3D sketch.

In 2015, a Kinect-based 3D sketching system [1, 2] was developed that allows a user to draw a 3D sketch using his or her hand as the drawing tool. A voice-activated Graphical User Interface (GUI) was designed to facilitate 3D sketching. Using this system, we collected the Kinect300 3D sketch dataset, which comprises 300 sketches of 30 classes, each with 10 sketches, drawn by 17 users (4 females and 13 males) majoring in computer science or mathematics-related fields. The average age of the 17 users is 21, and only two of the male users have art experience.

Based on this new benchmark, we organize this track to further foster this challenging research area by soliciting retrieval results from current state-of-the-art 3D model retrieval methods for comparison, especially in terms of their scalability to 3D sketch queries. We will also provide evaluation code that computes a set of performance metrics similar to those used in query-by-model retrieval.

Benchmark Overview

Our 3D sketch-based 3D model retrieval benchmark is built on the 3D sketch collection of Li, Lu et al. [1, 2] and the SHREC'13 Sketch Track Benchmark (SHREC13STB) [3].

To explore how to draw 3D sketches in a 3D space and how to use a hand-drawn 3D sketch to search for similar 3D models, Li, Lu et al. [1, 2] collected 300 human-drawn 3D sketches of 30 classes, each with 10 sketches, using a Kinect-based virtual 3D drawing system. Because the same number of sketches was collected for every class, the collection avoids class-size bias, while the sketch variation within each class is still adequate.

To facilitate learning-based retrieval, we randomly select 7 sketches from each class for training and use the remaining 3 sketches per class for testing, while the complete set of target models is kept as the target dataset. Participants who use learning in their approach(es) need to submit results on the training and testing datasets separately. Otherwise, only the retrieval results on the complete query dataset are needed. To provide a complete reference for future users of our benchmark, we will evaluate the participating algorithms on both the testing dataset (3 sketches per class, 90 3D sketches in total) and the complete benchmark (10 sketches per class, 300 sketches).
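The organizers fix and distribute the actual training/testing split, so participants do not need to reproduce it. The sketch below only illustrates a per-class 7/3 random split of the kind described above, assuming the query labels are available as a plain Python list; the function name `split_per_class`, the seed, and the label strings are hypothetical.

```python
import random
from collections import defaultdict


def split_per_class(sketch_labels, n_train=7, seed=0):
    """Hypothetical helper: randomly split sketch indices per class.

    sketch_labels: one class label per sketch (e.g. 30 classes x 10 sketches).
    Returns (train_indices, test_indices), each sorted.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(sketch_labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the illustrative split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        train.extend(indices[:n_train])   # first 7 shuffled sketches -> training
        test.extend(indices[n_train:])    # remaining 3 sketches -> testing
    return sorted(train), sorted(test)


labels = [f"class{c}" for c in range(30) for _ in range(10)]
train, test = split_per_class(labels)
print(len(train), len(test))  # 210 90
```

Splitting inside each class, rather than over the whole query set, keeps exactly 3 testing sketches per class, which matches the 90-sketch testing dataset described above.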

3D Sketch Dataset

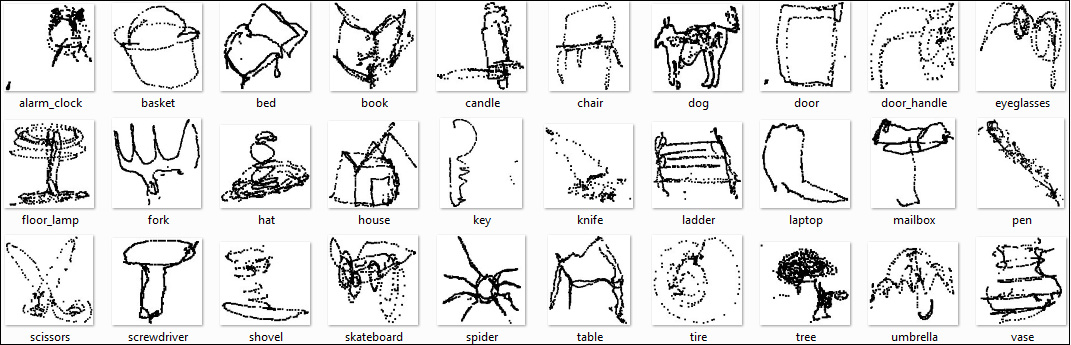

The 3D sketch query set comprises 300 3D sketches (30 classes, each with 10 sketches), of which 21 classes have relevant models in the target 3D model dataset of the SHREC'13 Sketch Track Benchmark (SHREC13STB) [3]. One example per class is shown in Fig. 1.

3D Model Dataset

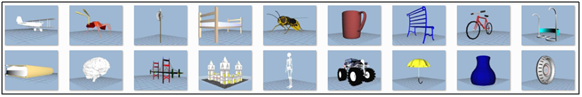

The 3D benchmark dataset is built on the target 3D model dataset of the SHREC'13 Sketch Track Benchmark (SHREC13STB) [3]. In total, 1258 models of 90 classes are selected to form our target 3D model dataset. Some examples are shown in Fig. 2.

Evaluation Method

To evaluate the retrieval algorithms comprehensively, we employ seven performance metrics commonly adopted in 3D model retrieval: Precision-Recall (PR) diagram, Nearest Neighbor (NN), First Tier (FT), Second Tier (ST), E-Measure (E), Discounted Cumulated Gain (DCG) and Average Precision (AP). We have also developed code to compute them.
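The metrics are not defined in detail in this call, so the following is a minimal per-query sketch, assuming the definitions commonly used in earlier SHREC sketch-based retrieval tracks. With |C| the number of target models in the query's class, NN is the precision of the top result, FT and ST are the recall within the top |C| and 2|C| results, the E-Measure is the harmonic mean of precision and recall over the top 32 results, DCG is normalized by the DCG of an ideal ranking, and AP averages the precision at each rank where a relevant model appears. This is not the organizers' evaluation code; the function name `evaluate_query` and its input layout are assumptions, and the PR diagram is represented only by per-query (recall, precision) points, which would still have to be averaged over all queries.

```python
import math


def evaluate_query(ranked_labels, query_label, n_relevant, e_cutoff=32):
    """Per-query retrieval metrics (illustrative sketch, not the official code).

    ranked_labels: class labels of all target models, sorted by increasing
        dissimilarity to the query (one ranked row of the dissimilarity matrix).
    query_label: class label of the query sketch.
    n_relevant: |C|, the number of target models in the query's class.
    """
    if n_relevant <= 0:
        raise ValueError("query class has no relevant target models")
    rel = [1 if label == query_label else 0 for label in ranked_labels]
    c = n_relevant

    nn = float(rel[0])           # is the closest target model relevant?
    ft = sum(rel[:c]) / c        # recall within the top |C| results
    st = sum(rel[:2 * c]) / c    # recall within the top 2|C| results

    # E-Measure: harmonic mean of precision and recall over the top k results.
    k = min(e_cutoff, len(rel))
    hits_k = sum(rel[:k])
    p_k, r_k = hits_k / k, hits_k / c
    e = 2 * p_k * r_k / (p_k + r_k) if hits_k else 0.0

    # DCG: rank 1 is undiscounted, rank i >= 2 is divided by log2(i);
    # the result is normalized by the DCG of an ideal ranking.
    dcg = rel[0] + sum(rel[i] / math.log2(i + 1) for i in range(1, len(rel)))
    ideal = 1 + sum(1 / math.log2(i + 1) for i in range(1, c))

    # PR points and AP: precision recorded each time a relevant model appears.
    hits, pr_points = 0, []
    for rank, r in enumerate(rel, start=1):
        if r:
            hits += 1
            pr_points.append((hits / c, hits / rank))
    ap = sum(p for _, p in pr_points) / c

    return {"NN": nn, "FT": ft, "ST": st, "E": e,
            "DCG": dcg / ideal, "AP": ap, "PR": pr_points}


m = evaluate_query(["a", "b", "a", "a", "b"], "a", n_relevant=3)
print(m["NN"], m["ST"])  # 1.0 1.0
```

Because a query sketch is never part of the target dataset, the tiers here use |C| and 2|C| directly rather than |C| - 1 as in query-by-model evaluation. How the track treats the 9 sketch classes without relevant target models is not stated in this call; the sketch simply rejects such queries.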

The Procedural Aspects

The complete dataset will be made available on January 29, and the results will be due one month later. Every participant will run the queries and send us their retrieval results. We will then perform the performance assessment. Participants and organizers will write a joint contest report detailing the results. The results of the track will be presented at the 3DOR 2016 workshop in Lisbon, Portugal.

Procedure

The following list is a step-by-step description of the activities:

- Participants must register by sending a message to Bo Li (li.bo.ntu0@gmail.com). Early registration is encouraged so that we get an impression of the number of participants at an early stage.

- The database will be made available via this website.

- Participants will submit dissimilarity matrices (also called distance matrices) for both the training and testing datasets. Each group may submit up to 5 matrices, for either the training or the testing dataset, resulting from different runs. Each run may use a different algorithm or a different parameter setting. More information on the dissimilarity matrix file format is available on the track website.

- Participants write a one-page description of their method, with at most two figures, and submit it together with their retrieval results.

- The evaluations will be done automatically.

- The organizers will release the evaluation scores of all the runs.

- The track results are combined into a joint paper, and then published in the proceedings of the Eurographics Workshop on 3D Object Retrieval after review by the 3DOR and SHREC organizers.

- The description of the track and its results are presented at the 2016 Eurographics Workshop on 3D Object Retrieval (May 7-8, 2016).
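The file format of the submitted dissimilarity matrices is specified separately and is not reproduced here, so the sketch below only shows how such a matrix could be assembled. It assumes that each query sketch and each target model has already been turned into a fixed-length feature vector by the participant's own method, that rows correspond to queries and columns to target models, and that Euclidean distance is used; all three are illustrative choices, and the helper names `dissimilarity_matrix` and `rank_targets` are hypothetical.

```python
import math


def dissimilarity_matrix(query_features, target_features):
    """Hypothetical helper: one row per query, one column per target model.

    Entry [i][j] is the Euclidean distance between query i and target j;
    smaller values mean more similar shapes.
    """
    return [[math.dist(q, t) for t in target_features] for q in query_features]


def rank_targets(row):
    """Target indices ordered from most to least similar to the query."""
    return sorted(range(len(row)), key=row.__getitem__)


# Toy 2D "descriptors" for two query sketches and three target models.
queries = [[0.0, 0.0], [1.0, 1.0]]
targets = [[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]]
D = dissimilarity_matrix(queries, targets)
print(rank_targets(D[0]))  # [0, 2, 1]
```

Keeping the column order identical to the order of the target models is what allows the evaluation to map each ranked row back to target class labels. `math.dist` requires Python 3.8 or later.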

Schedule

| Date | Event |
|---|---|
| January 8 | Call for participation. |
| January 15 | A few sample 3D sketches and target models will be available online. |
| January 22 | Please register before this date. |
| January 29 | Distribution of the database. Participants can start the retrieval or train their algorithms. |
| February 29 | Submission of the results on the complete dataset and a one-page description of the method(s). |
| March 4 | Distribution of relevance judgments and evaluation scores. |
| March 7 | Track is finished and results are ready for inclusion in a track report. |
| March 15 | Submission of the track report for review. |
| March 22 | All reviews due, feedback and notifications. |
| April 1 | Camera-ready track paper submitted for inclusion in the proceedings. |
| May 7-8 | Eurographics Workshop on 3D Object Retrieval including SHREC'2016. |

Organizers

Bo Li - University of Central Missouri, USA

Yijuan Lu - Texas State University, USA

References

[1] Bo Li, Yijuan Lu, et al., 3D Sketch-Based 3D Model Retrieval, Short Paper, Annual ACM International Conference on Multimedia Retrieval (ICMR), 555-558, 2015

[2] Bo Li, Yijuan Lu, et al., KinectSBR: A Kinect-Assisted 3D Sketch-Based 3D Model Retrieval System, Demo Paper, Annual ACM International Conference on Multimedia Retrieval (ICMR), 655-656, 2015

[3] Bo Li, Yijuan Lu, Afzal Godil, Tobias Schreck, Masaki Aono, Henry Johan, Jose M. Saavedra, Shoki Tashiro, SHREC'13 Track: Large Scale Sketch-Based 3D Shape Retrieval, Eurographics Workshop on 3D Object Retrieval (3DOR), 89-96, 2013

Please cite the paper:

Bo Li, Yijuan Lu, Fuqing Duan, Shuilong Dong, Yachun Fan, Lu Qian, Hamid Laga, Haisheng Li, Yuxiang Li, Peng Liu, Maks Ovsjanikov, Hedi Tabia, Yuxiang Ye, Huanpu Yin, Ziyu Xu, SHREC'16 Track: 3D Sketch-Based 3D Shape Retrieval, in: A. Ferreira, A. Giachetti and D. Giorgi (eds.), Eurographics Workshop on 3D Object Retrieval 2016 (3DOR 2016), 47-54, Lisbon, Portugal, May 7-8, 2016.