SHREC 2014 - Large Scale Comprehensive 3D Shape Retrieval

CVIU journal information

We published an extended CVIU journal paper based on the SHREC'14 Sketch Track and Comprehensive Track. Please see the CVIU paper at the bottom.

Objective

The objective of this track is to evaluate the performance of 3D shape retrieval approaches on a large-scale comprehensive 3D shape database which contains different types of models, such as generic, articulated, CAD and architecture models.

Introduction

With the increasing number of 3D models created every day and stored in databases, the development of effective and scalable 3D search algorithms has become an important research area. In this contest, the task will be to retrieve 3D models similar to a complete 3D model query from a new integrated large-scale comprehensive 3D shape benchmark that includes various types of models. Owing to the integration of the most important existing benchmarks, the newly created benchmark is the most exhaustive to date in terms of the number of semantic query categories covered, as well as the variations of model types. The shape retrieval contest will allow researchers to evaluate the results of different 3D shape retrieval approaches when applied to a large-scale comprehensive 3D database.

The benchmark is motivated by a recent large collection of human sketches built by Eitz et al. [1]. To explore how humans draw sketches and how humans recognize sketches, they collected 20,000 human-drawn sketches, categorized into 250 classes, each with 80 sketches. This sketch dataset is exhaustive in terms of the number of object categories. Thus, we believe that a 3D model retrieval benchmark based on their object categorization will be more comprehensive than currently available 3D retrieval benchmarks, and more appropriate for objectively and accurately evaluating the practical performance of a comprehensive 3D model retrieval algorithm used in the real world.

Considering this, we build the SHREC'14 Large Scale Comprehensive Track Benchmark (SHREC14LSGTB) by collecting relevant models from the major previously proposed 3D object retrieval benchmarks. Our target is to find models for as many of the 250 classes as possible, and to find as many models as possible for each class. These previous benchmarks were compiled with different goals in mind and, to date, have not been considered in their sum. Our work is the first to integrate them to form a new, larger benchmark corpus for comprehensive 3D shape retrieval.

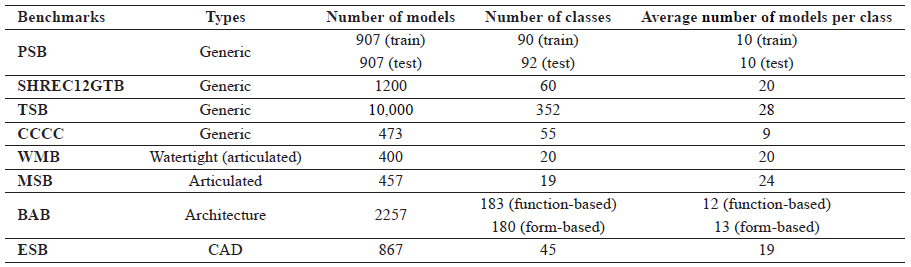

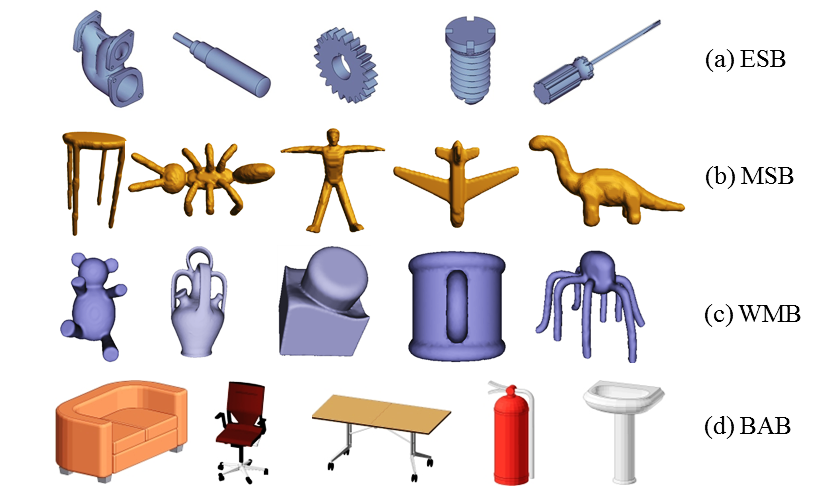

To avoid adding duplicate models, we selected the following 8 benchmarks: the Princeton Shape Benchmark (PSB) [2], the SHREC'12 Generic Track Benchmark (SHREC12GTB) [3], the Toyohashi Shape Benchmark (TSB) [4], the Konstanz 3D Model Benchmark (CCCC) [5], the Watertight Model Benchmark (WMB) [6], the McGill 3D Shape Benchmark (MSB) [7], Bonn's Architecture Benchmark (BAB) [8], and the Engineering Shape Benchmark (ESB) [9]. Table 1 lists their basic classification information while Fig. 1 shows some example models for the four specific benchmarks. In total, the extended large-scale benchmark has 8,987 models, classified into 171 classes.

Based on this new challenging benchmark, we organize this track to foster this research area by soliciting retrieval results from current state-of-the-art 3D model retrieval methods for comparison, especially for practical retrieval performance. We will also provide evaluation code to compute a set of performance metrics, including those commonly used in the Query-by-Model retrieval technique.

Dataset

The SHREC'14 Large Scale Comprehensive Retrieval Track Benchmark has 8,987 models, categorized into 171 classes. We adopt a voting scheme to classify models. For each classification, we have at least two votes. If these two votes agree with each other, we confirm that the classification is correct; otherwise, we perform a third vote to finalize the classification. All the models are categorized according to the classifications in Eitz et al. [1], based on visual similarity.
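The voting scheme can be sketched in a few lines. This is only an illustration, not the organizers' tooling: the function name `resolve_class` is hypothetical, and the sketch assumes that the third vote is simply taken as final when the first two disagree, since the text does not say what happens if it matches neither.

```python
def resolve_class(vote_a, vote_b, third_vote):
    """Return the class label for one model.

    vote_a, vote_b: labels proposed by the first two voters.
    third_vote: zero-argument callable, consulted only on disagreement
    (assumption: its answer is accepted as the final label).
    """
    if vote_a == vote_b:
        # Two agreeing votes confirm the classification.
        return vote_a
    # Disagreement: a third vote finalizes the classification.
    return third_vote()
```

Passing the third vote as a callable means the extra annotator is only asked when the first two votes differ.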

Evaluation Method

To have a comprehensive evaluation of the retrieval algorithms, we employ seven commonly adopted performance metrics in 3D model retrieval: the Precision-Recall (PR) graph, Nearest Neighbor (NN), First Tier (FT), Second Tier (ST), E-Measure (E), Discounted Cumulated Gain (DCG) and Average Precision (AP). We have also developed code to compute them. Besides the common definitions, we also develop a weighted variation of each metric by incorporating the popularity of each class in real life. Basically, we define popularity based on the number of available models. We assume there is a linear correlation between the number of available models in one class and the degree of popularity of the class. Therefore, we adopt a weight equal to the reciprocal of the number of models to define each weighted performance metric.
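As a rough illustration of how these scalar metrics can be computed from a dissimilarity matrix, here is a minimal Python sketch. It is not the track's evaluation code. It assumes the definitions popularized by the Princeton Shape Benchmark (the query is excluded from its own ranking, FT and ST use cutoffs of C-1 and 2(C-1) for a class of size C, and E uses the top 32 results), and it omits the PR graph. The names `rank_metrics`, `evaluate` and `weighted_mean` are hypothetical, and the reciprocal weighting is one plausible reading of the description above.

```python
import math
from collections import Counter

def rank_metrics(ranked_labels, query_label, k_e=32):
    """Metrics for one query; ranked_labels is sorted by ascending
    dissimilarity and must not contain the query itself."""
    rel = [1 if lab == query_label else 0 for lab in ranked_labels]
    n_rel = sum(rel)  # relevant models, i.e. class size minus the query
    if n_rel == 0:
        raise ValueError("query class has no other members")
    k = min(k_e, len(rel))
    hits_k = sum(rel[:k])
    p, r = hits_k / k, hits_k / n_rel
    e = 0.0 if hits_k == 0 else 2 * p * r / (p + r)
    # DCG: the first rank is undiscounted, rank i >= 2 is divided by log2(i).
    dcg = rel[0] + sum(g / math.log2(i) for i, g in enumerate(rel[1:], start=2))
    ideal = 1 + sum(1 / math.log2(i) for i in range(2, n_rel + 1))
    # AP: mean of the precision values at each relevant rank.
    hits, precisions = 0, []
    for i, g in enumerate(rel, start=1):
        if g:
            hits += 1
            precisions.append(hits / i)
    return {
        "NN": float(rel[0]),
        "FT": sum(rel[:n_rel]) / n_rel,
        "ST": sum(rel[:2 * n_rel]) / n_rel,
        "E": e,
        "DCG": dcg / ideal,
        "AP": sum(precisions) / n_rel,
    }

def evaluate(dissim, labels):
    """Use every model as a query against an N x N dissimilarity matrix."""
    results = []
    for q, row in enumerate(dissim):
        order = sorted((j for j in range(len(row)) if j != q), key=lambda j: row[j])
        results.append(rank_metrics([labels[j] for j in order], labels[q]))
    return results

def weighted_mean(per_query, labels, metric):
    """Average a metric with each query weighted by 1 / (size of its class)."""
    sizes = Counter(labels)
    weights = [1 / sizes[lab] for lab in labels]
    total = sum(w * m[metric] for w, m in zip(weights, per_query))
    return total / sum(weights)
```

For example, `evaluate(dissim, labels)` returns one metric dictionary per query model, and `weighted_mean(per_query, labels, "AP")` then gives a class-balanced average in which every class contributes equally regardless of its size.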

The Procedural Aspects

- The complete dataset will be made available on the 17th of January and the results will be due one month after that. Every participant will perform the queries and send us their retrieval results. We will then do the performance assessment. Participants and organizers will write a joint contest report to detail the results. Results of the track will be presented during the 3DOR workshop 2014 in Strasbourg, France.

- The participants must register by sending a message to shrec@nist.gov. Early registration is encouraged.

- Participants may submit up to 5 dissimilarity matrices, resulting from different runs. Each run can be a different algorithm, or a different parameter setting. More information on the dissimilarity matrix file format.

Timeline

| Date | Event |
| --- | --- |
| January 1 | Call for participation. |
| January 10 | A few sample models will be available online. |
| January 15 | Please register before this date. |
| January 17 | Distribution of the database. |
| February 17 | Submission of the results on the complete dataset and a one-page description of the method(s). |
| February 20 | Distribution of evaluation results. |
| February 22 | Track is finished, and results are ready for inclusion in a track report. |
| February 25 | Submit the track paper for review. |
| March 1 | All reviews due, feedback and notifications. |
| March 6 | Camera ready track paper submitted for inclusion in the proceedings. |
| April 6 | Eurographics Workshop on 3D Object Retrieval including SHREC 2014. |

Organizers

Bo Li, Yijuan Lu - Texas State University, USA

Chunyuan Li, Afzal Godil - National Institute of Standards and Technology, USA

Tobias Schreck - University of Konstanz, Germany

Acknowledgements

We would like to thank Mathias Eitz, James Hays and Marc Alexa, who collected the 250 classes of sketches. We would also like to thank the following authors for building the 3D benchmarks:

- Philip Shilane, Patrick Min, Michael M. Kazhdan, Thomas A. Funkhouser who built the Princeton Shape Benchmark (PSB);

- Atsushi Tatsuma, Hitoshi Koyanagi, Masaki Aono who built the Toyohashi Shape Benchmark (TSB);

- Dejan Vranic and colleagues who built the Konstanz 3D Model Benchmark (CCCC);

- Daniela Giorgi who built the Watertight Model Benchmark (WMB);

- Siddiqi K, Zhang J, Macrini D, Shokoufandeh A, Bouix S, Dickinson SJ who built the McGill 3D Shape Benchmark (MSB);

- Raoul Wessel, Ina Blümel, Reinhard Klein, University of Bonn, who built Bonn's Architecture Benchmark (BAB);

- Subramaniam Jayanti, Yagnanarayanan Kalyanaraman, Natraj Iyer, Karthik Ramani who built the Engineering Shape Benchmark (ESB).

References

[1] Mathias Eitz, James Hays, Marc Alexa, How do humans sketch objects? ACM Trans. Graph. 31(4): 44, 2012

[2] Philip Shilane, Patrick Min, Michael M. Kazhdan, Thomas A. Funkhouser, The Princeton Shape Benchmark, SMI 2004, pp. 167-178, 2004

[3] Bo Li, Afzal Godil, Masaki Aono, X. Bai, Takahiko Furuya, L. Li, Roberto Javier López-Sastre, Henry Johan, Ryutarou Ohbuchi, Carolina Redondo-Cabrera, Atsushi Tatsuma, Tomohiro Yanagimachi, S. Zhang: SHREC'12 Track: Generic 3D Shape Retrieval. 3DOR 2012: 119-126, 2012

[4] Atsushi Tatsuma, Hitoshi Koyanagi, Masaki Aono, A Large-Scale Shape Benchmark for 3D Object Retrieval: Toyohashi Shape Benchmark, In Proc. of 2012 Asia Pacific Signal and Information Processing Association (APSIPA2012), Hollywood, California, USA, 2012

[5] Vranic Dejan. 3D model retrieval. PhD thesis, University of Leipzig, 2004

[6] Veltkamp R. C., ter Haar F. B.: SHREC 2007 3D Retrieval Contest. Technical Report UU-CS-2007-015, Department of Information and Computing Sciences, Utrecht University, 2007

[7] Siddiqi K, Zhang J, Macrini D, Shokoufandeh A, Bouix S, Dickinson SJ (2008) Retrieving articulated 3-D models using medial surfaces. Mach Vis Appl 19(4):261-275

[8] Raoul Wessel, Ina Blümel, Reinhard Klein: A 3D Shape Benchmark for Retrieval and Automatic Classification of Architectural Data. 3DOR 2009: 53-56

[9] Subramaniam Jayanti, Yagnanarayanan Kalyanaraman, Natraj Iyer, Karthik Ramani: Developing an engineering shape benchmark for CAD models. Computer-Aided Design 38(9): 939-953 (2006)

Please cite the CVIU and 3DOR'14 papers

[1] Bo Li, Yijuan Lu, Chunyuan Li, Afzal Godil, Tobias Schreck, Masaki Aono, Martin Burtscher, Qiang Chen, Nihad Karim Chowdhury, Bin Fang, Hongbo Fu, Takahiko Furuya, Haisheng Li, Jianzhuang Liu, Henry Johan, Ryuichi Kosaka, Hitoshi Koyanagi, Ryutarou Ohbuchi, Atsushi Tatsuma, Yajuan Wan, Chaoli Zhang, Changqing Zou. A Comparison of 3D Shape Retrieval Methods Based on a Large-scale Benchmark Supporting Multimodal Queries. Computer Vision and Image Understanding, November 4, 2014.

[2] Bo Li, Yijuan Lu, Chunyuan Li, Afzal Godil, Tobias Schreck, Masaki Aono, Qiang Chen, Nihad Karim Chowdhury, Bin Fang, Takahiko Furuya, Henry Johan, Ryuichi Kosaka, Hitoshi Koyanagi, Ryutarou Ohbuchi, Atsushi Tatsuma. SHREC'14 Track: Large Scale Comprehensive 3D Shape Retrieval. Eurographics Workshop on 3D Object Retrieval 2014 (3DOR 2014): 131-140, 2014.