SHREC 2019 - Extended 2D Scene Sketch-Based 3D Scene Retrieval

CVIU journal information

We have published an extended CVIU journal paper based on the SHREC'19 and SHREC'18 Sketch/Image tracks! Please see the CVIU paper at the bottom of this page.

Objective

In the months following our SHREC'18 2D Scene Sketch-Based 3D Scene Retrieval (SceneSBR2018) track [1], we extended the number of scene categories from the initial 10 classes of the SceneSBR2018 benchmark to 30 classes [2]. The result is a new and more challenging benchmark, SceneSBR2019, which contains 750 2D scene sketches and 3,000 3D scene models. The objective of this track is therefore to further evaluate the performance of 2D scene sketch-based 3D scene model retrieval algorithms on this extended and more comprehensive benchmark.

Introduction

2D scene sketch-based 3D scene model retrieval aims to retrieve human-made 3D scene models given a user's hand-drawn 2D scene sketch. Because sketching is intuitive, this research topic has vast applications such as 3D scene reconstruction, 3D geometry video retrieval, and 3D AR/VR entertainment. It is also a challenging topic within 3D scene model retrieval because of the semantic gap between the two representations: non-realistic 2D scene sketches differ substantially from 3D scene models or their views.

So far, existing 3D model retrieval algorithms have mainly focused on single-object retrieval and have not addressed the retrieval of 3D scene content, which raises many new research questions and challenges. There are two major reasons for this: 1) very few 3D scene shape benchmarks are available, and collecting a large-scale 3D scene dataset is challenging; 2) a large semantic gap exists between the iconic representations of hand-drawn 2D scene sketches and the accurate 3D coordinate representations of 3D scenes. These challenges make retrieving 3D scene models from 2D scene sketch queries a meaningful, interesting, and promising research direction, but also a difficult one.

However, SceneSBR2018 contains only 10 distinct scene classes, which is one reason why all three deep learning-based participating methods achieved excellent performance on it. After that track, we therefore tripled the size of SceneSBR2018, resulting in the extended benchmark SceneSBR2019, which has 750 2D scene sketches and 3,000 3D scene models. As before, the 2D scene sketches and 3D scene models are evenly distributed across the 30 classes. The 2D scene sketches and 3D scene models belonging to the initial 10 classes of SceneSBR2018 are unchanged.

This track therefore seeks participants who will contribute new methods that further advance 2D scene sketch-based 3D scene retrieval, to be evaluated and compared on the new SceneSBR2019 benchmark, especially in terms of scalability to a larger number of scene categories. As before, we will also provide evaluation code that computes a set of performance metrics similar to those used in Query-by-Model retrieval.

Benchmark Overview

Building process. The first step in designing the benchmark is category selection, for which we referred to several of the most popular 2D/3D scene datasets, such as Places [3] and SUN [4]. The criterion for category selection is popularity. We selected the 30 most popular scene classes (including the initial 10 classes of SceneSBR2018) from the 88 category labels available in the Places88 dataset [3] through a voting mechanism among three people (two graduate students as voters and a faculty member as the moderator), based on their judgments. Note that these 88 common scene categories are shared by ImageNet [5], SUN [4], and Places [3]. To collect data for the additional 20 classes, we gathered sketches from Flickr and Google Images, and downloaded SketchUp 3D scene models from 3D Warehouse [6] (originally in ".SKP" format; we also provide converted ".OBJ" versions).

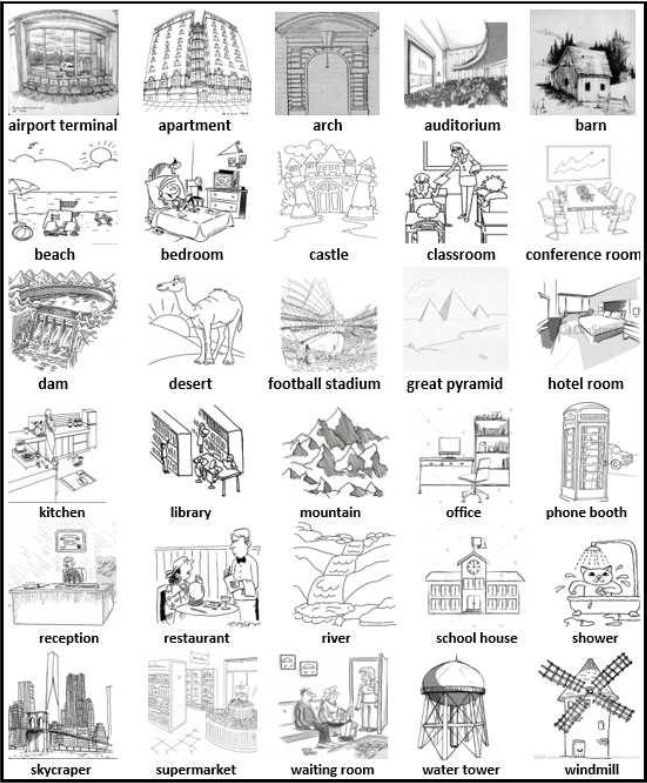

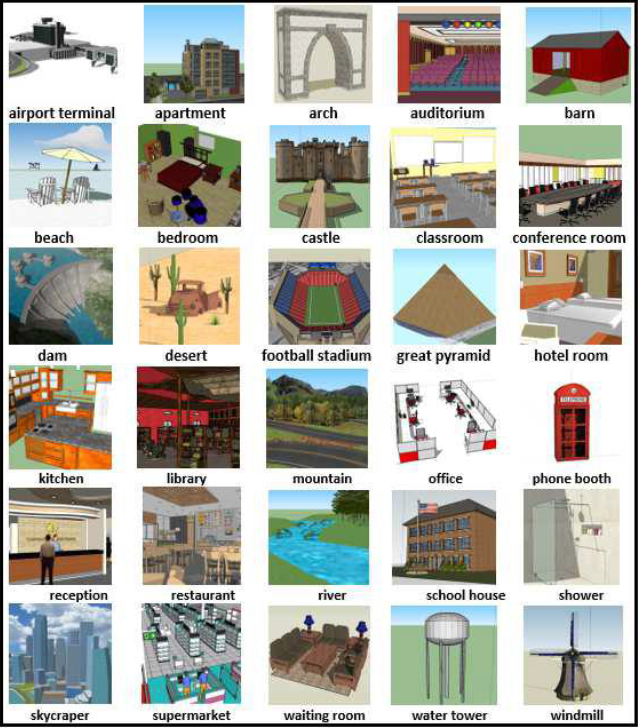

Benchmark Details. Our extended 2D scene sketch-based 3D scene retrieval benchmark, SceneSBR2019, expands the initial 10 classes of SceneSBR2018 by adding 20 new classes, for a more comprehensive total of 30 classes. We added 500 2D scene sketches to its 2D scene sketch dataset and 2,000 SketchUp 3D scene models (".SKP" and ".OBJ" formats) to its 3D scene dataset. As in the original 10 classes, each of the 20 additional classes has 25 2D scene sketches and 100 3D scene models. SceneSBR2019 therefore contains 750 2D scene sketches (25 per class) and 3,000 3D scene models (100 per class) across 30 scene categories. Examples for each class are shown in Fig. 1 and Fig. 2.
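As a quick consistency check of the counts above, the following Python sketch recomputes the dataset sizes from the stated per-class numbers (25 sketches and 100 models per class). The helper name `dataset_size` is illustrative only and not part of any released benchmark tooling.

```python
from typing import Tuple

SKETCHES_PER_CLASS = 25   # stated number of 2D scene sketches per class
MODELS_PER_CLASS = 100    # stated number of 3D scene models per class


def dataset_size(num_classes: int) -> Tuple[int, int]:
    """Return (number of sketches, number of models) for num_classes classes."""
    return num_classes * SKETCHES_PER_CLASS, num_classes * MODELS_PER_CLASS


scenesbr2018 = dataset_size(10)   # initial 10 classes
added = dataset_size(20)          # the 20 new classes
scenesbr2019 = dataset_size(30)   # all 30 classes

print("SceneSBR2018:", scenesbr2018)   # SceneSBR2018: (250, 1000)
print("added:", added)                 # added: (500, 2000)
print("SceneSBR2019:", scenesbr2019)   # SceneSBR2019: (750, 3000)
```

The sizes add up: the 10 original classes plus the 20 new ones give exactly three times the original benchmark.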

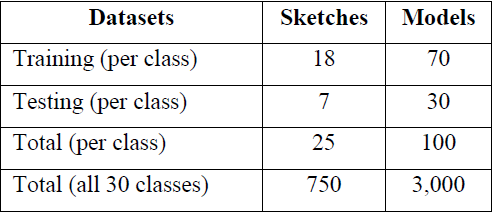

As in the SHREC'18 sketch track, we randomly selected 18 sketches and 70 models from each class for training; the remaining 7 sketches and 30 models per class are used for testing, as shown in Table 1. Participants using a learning-based approach need to submit results on the training and testing datasets separately. Participants using a non-learning-based approach should instead submit retrieval results on the complete dataset (750 sketches, 3,000 models).
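The per-class random split can be sketched as follows. This is a minimal illustration assuming the items are grouped by class label; `split_per_class`, the seed, and the item names are hypothetical. It only shows how such a split is drawn and is not a substitute for the organizers' split shown in Table 1.

```python
import random
from typing import Dict, List, Tuple


def split_per_class(items_by_class: Dict[str, List[str]], n_train: int,
                    seed: int = 0) -> Tuple[List[str], List[str]]:
    """Randomly pick n_train items per class for training; the rest go to testing."""
    rng = random.Random(seed)          # fixed seed so the split is reproducible
    train: List[str] = []
    test: List[str] = []
    for cls in sorted(items_by_class):  # deterministic class order
        items = list(items_by_class[cls])
        rng.shuffle(items)
        train.extend(items[:n_train])
        test.extend(items[n_train:])
    return train, test


# Hypothetical item names: 30 classes with 25 sketches and 100 models each.
sketches = {f"class{c:02d}": [f"class{c:02d}_sketch{i:02d}" for i in range(25)] for c in range(30)}
models = {f"class{c:02d}": [f"class{c:02d}_model{i:03d}" for i in range(100)] for c in range(30)}

sketch_train, sketch_test = split_per_class(sketches, n_train=18)
model_train, model_test = split_per_class(models, n_train=70)
print(len(sketch_train), len(sketch_test), len(model_train), len(model_test))
```

With 18 of 25 sketches and 70 of 100 models per class, the split yields 540 training and 210 testing sketches, and 2,100 training and 900 testing models.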

2D Scene Sketch Dataset. The 2D scene sketch dataset comprises 750 2D scene sketches (30 classes, each with 25 sketches). One example per class is shown in Fig. 1.

3D Scene Dataset. The 3D scene dataset consists of 3,000 selected 3D scene models downloaded from 3D Warehouse, with 100 3D scene models per class. One example per class is shown in Fig. 2.

Evaluation Method

To evaluate the retrieval algorithms comprehensively, we employ seven performance metrics commonly adopted in the 3D model retrieval community: Precision-Recall (PR) diagram, Nearest Neighbor (NN), First Tier (FT), Second Tier (ST), E-Measure (E), Discounted Cumulated Gain (DCG), and Average Precision (AP) [7]. We have developed code to compute these metrics and will provide it to participants.
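To make the metric definitions concrete, here is a minimal per-query sketch in Python. It follows our reading of the conventions in [7]: C is the number of target models in the query's class (the query sketch is not itself in the target set), FT and ST are recall within the top C and top 2C results, the E-Measure is the F-measure over the top 32 results (an assumed default, exposed as `e_cutoff`), and DCG is normalized by the ideal DCG. The function names are hypothetical; the organizers' evaluation code is authoritative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def precision_recall_points(rel: Sequence[int], c: int) -> List[Tuple[float, float]]:
    """(recall, precision) at the rank of each relevant result, for a PR diagram."""
    points, found = [], 0
    for i, g in enumerate(rel, start=1):
        if g:
            found += 1
            points.append((found / c, found / i))
    return points


def retrieval_metrics(ranked_labels: Sequence[str], query_label: str,
                      num_relevant: int, e_cutoff: int = 32) -> Dict[str, float]:
    """NN, FT, ST, E, DCG and AP for a single sketch query.

    ranked_labels: class labels of the target models, most similar first.
    num_relevant:  C, the number of target models in the query's class.
    """
    rel = [1 if lbl == query_label else 0 for lbl in ranked_labels]
    c = num_relevant

    nn = float(rel[0])            # is the top-1 result relevant?
    ft = sum(rel[:c]) / c         # recall within the top C results
    st = sum(rel[:2 * c]) / c     # recall within the top 2C results

    # E-Measure: harmonic mean of precision and recall over the top e_cutoff results.
    hits = sum(rel[:e_cutoff])
    p, r = hits / e_cutoff, hits / c
    e = 2 * p * r / (p + r) if p + r > 0 else 0.0

    # DCG: rank 1 undiscounted, rank i > 1 divided by log2(i); normalized by the ideal DCG.
    def dcg(gains: Sequence[int]) -> float:
        return sum(g if i == 1 else g / math.log2(i) for i, g in enumerate(gains, start=1))
    ndcg = dcg(rel) / dcg([1] * c)

    # AP: mean precision at the rank of each relevant result.
    ap = sum(prec for _, prec in precision_recall_points(rel, c)) / c
    return {"NN": nn, "FT": ft, "ST": st, "E": e, "DCG": ndcg, "AP": ap}


metrics = retrieval_metrics(["a", "b", "a", "b"], "a", num_relevant=2, e_cutoff=4)
print(metrics)
```

Benchmark-level scores would then be obtained by averaging each metric over all query sketches, and the PR diagram by interpolating the per-query (recall, precision) points at common recall levels before averaging.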

The Procedural Aspects

The complete dataset will be made available on the 25th of January, and the results will be due six weeks later. Every participant is expected to perform the queries and send us their retrieval results. We will then carry out the performance assessment. Participants and organizers will collaborate on a joint SHREC track report detailing the results and evaluations. The track results will be presented by one of the organizers at the 2019 Eurographics 3DOR workshop in Genova, Italy.

Procedure

The following list is a step-by-step description of the activities:

- Participants must register by sending a message to Juefei Yuan. Early registration is encouraged so that we can estimate the number of participants at an early stage.

- The database will be made available via this website.

- Participants will submit rank lists on the test dataset (for learning-based methods) or on the complete dataset (for non-learning-based methods). Each group may submit up to 5 matrices, for either the training or the testing dataset, resulting from different runs. Each run may use a different algorithm or a different parameter setting. See the track website for more information on the dissimilarity matrix and rank list file formats.

- Participants write a one-page description of their method, with at most two figures, and submit it together with their run results.

- The evaluations will be done automatically.

- The organizers will release the evaluation scores of all runs.

- The track results will be combined into a joint paper, which will be published in the proceedings of the Eurographics Workshop on 3D Object Retrieval after being reviewed by the 3DOR and SHREC organizers.

- The track description and its results will be presented at the 2019 Eurographics Workshop on 3D Object Retrieval (May 5-6, 2019).
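For the submission step above, the following Python sketch shows how a dissimilarity matrix relates to rank lists. It assumes a matrix whose row q holds the dissimilarities between query sketch q and every target model (smaller means more similar); `rank_lists` is a hypothetical helper, and the exact required file layouts (indexing, headers, file naming) are defined in the track's format documentation, not here.

```python
from typing import List, Optional, Sequence


def rank_lists(dissimilarity: Sequence[Sequence[float]],
               max_rank: Optional[int] = None) -> List[List[int]]:
    """Convert a (num_queries x num_targets) dissimilarity matrix into rank lists.

    Each rank list orders target indices from most to least similar;
    ties are broken by the smaller target index so the output is deterministic.
    """
    lists = []
    for row in dissimilarity:
        order = sorted(range(len(row)), key=lambda t: (row[t], t))
        lists.append(order if max_rank is None else order[:max_rank])
    return lists


# Two query sketches against three target models.
print(rank_lists([[0.3, 0.1, 0.2], [0.5, 0.5, 0.0]]))  # [[1, 2, 0], [2, 0, 1]]
```

Each of a group's up to 5 runs would produce its own matrix, and hence its own set of rank lists.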

Preliminary Timeline

| Date | Activity |
|---|---|
| February 1 | Call for participation. |
| February 1 | Distribution of the database. Participants can start the retrieval or train their algorithms. |
| February 18 | Please register before this date. |
| March 8 | Submission of the results on the test (for learning-based methods) or the complete (for non-learning-based approaches) datasets, and a one-page description of the method(s). |
| March 11 | Distribution of relevance judgments and evaluation scores. |
| March 13 | Track is finished and results are ready for inclusion in a track report. |
| March 15 | Submit the track report for review. |
| March 25 | Reviews done, feedback and notifications given. |
| April 5 | Camera-ready track paper submitted for inclusion in the proceedings. |
| May 5-6 | Eurographics Workshop on 3D Object Retrieval 2019, featuring SHREC'2019. |

Organizers

Juefei Yuan - University of Southern Mississippi, USA

Hameed Abdul-Rashid - University of Southern Mississippi, USA

Bo Li - University of Southern Mississippi, USA

Yijuan Lu - Texas State University, USA

Tobias Schreck - Graz University of Technology, Austria

References

[1] Juefei Yuan, Bo Li, Yijuan Lu, Song Bai, Xiang Bai, Ngoc-Minh Bui, Minh N. Do, Trong-Le Do, Anh Duc Duong, Xinwei He, Tu-Khiem Le, Wenhui Li, Anan Liu, Xiaolong Liu, Khac-Tuan Nguyen, Vinh-Tiep Nguyen, Weizhi Nie, Van-Tu Ninh, Yuting Su, Vinh Ton-That, Minh-Triet Tran, Shu Xiang, Heyu Zhou, Yang Zhou, Zhichao Zhou. SHREC'18 Track: 2D Sketch-Based 3D Scene Retrieval. 3DOR 2018: 37-44.

[2] Juefei Yuan, Hameed Abdul-Rashid, Bo Li, Yijuan Lu. Sketch/Image-Based 3D Scene Retrieval: Benchmark, Algorithm, Evaluation. The IEEE 2nd International Conference on Multimedia Information Processing and Retrieval (MIPR'19), San Jose, CA, USA, March 28-30, 2019 (invited paper, accepted January 2019).

[3] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell., 40(6):1452-1464, 2018.

[4] J. Xiao, K. A. Ehinger, J. Hays, A. Torralba, and A. Oliva. SUN database: Large-scale scene recognition from abbey to zoo. In CVPR, pages 3485-3492. IEEE Computer Society, 2010.

[5] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A large-scale hierarchical image database. In CVPR, pages 248-255, 2009.

[6] 3D Warehouse. https://3dwarehouse.sketchup.com/?hl=en.

[7] Bo Li, Yijuan Lu, Chunyuan Li, Afzal Godil, Tobias Schreck, Masaki Aono, Martin Burtscher, Qiang Chen, Nihad Karim Chowdhury, Bin Fang, Hongbo Fu, Takahiko Furuya, Haisheng Li, Jianzhuang Liu, Henry Johan, Ryuichi Kosaka, Hitoshi Koyanagi, Ryutarou Ohbuchi, Atsushi Tatsuma, Yajuan Wan, Chaoli Zhang, Changqing Zou. A comparison of 3D shape retrieval methods based on a large-scale benchmark supporting multimodal queries. Computer Vision and Image Understanding, 131:1-27, 2015.

Please cite the following papers:

[1] Juefei Yuan, Hameed Abdul-Rashid, Bo Li, Yijuan Lu, Tobias Schreck, Song Bai, Xiang Bai, Ngoc-Minh Bui, Minh N. Do, Trong-Le Do, Anh-Duc Duong, Kai He, Xinwei He, Mike Holenderski, Dmitri Jarnikov, Tu-Khiem Le, Wenhui Li, Anan Liu, Xiaolong Liu, Vlado Menkovski, Khac-Tuan Nguyen, Thanh-An Nguyen, Vinh-Tiep Nguyen, Weizhi Nie, Van-Tu Ninh, Perez Rey, Yuting Su, Vinh Ton-That, Minh-Triet Tran, Tianyang Wang, Shu Xiang, Shandian Zhe, Heyu Zhou, Yang Zhou, Zhichao Zhou. A Comparison of Methods for 3D Scene Shape Retrieval. Computer Vision and Image Understanding, Vol. 201, December, 2020.

[2] Juefei Yuan, Hameed Abdul-Rashid, Bo Li, Yijuan Lu, Tobias Schreck, Ngoc-Minh Bui, Trong-Le Do, Khac-Tuan Nguyen, Thanh-An Nguyen, Vinh-Tiep Nguyen, Minh-Triet Tran, Tianyang Wang. SHREC'19 Track: Extended 2D Scene Sketch-Based 3D Scene Retrieval. In: S. Biasotti, G. Lavoué, B. Falcidieno, I. Pratikakis, and R. C. Veltkamp (eds.), Eurographics Workshop on 3D Object Retrieval 2019 (3DOR 2019), Genova, Italy, May 5-6, 2019.